Blog

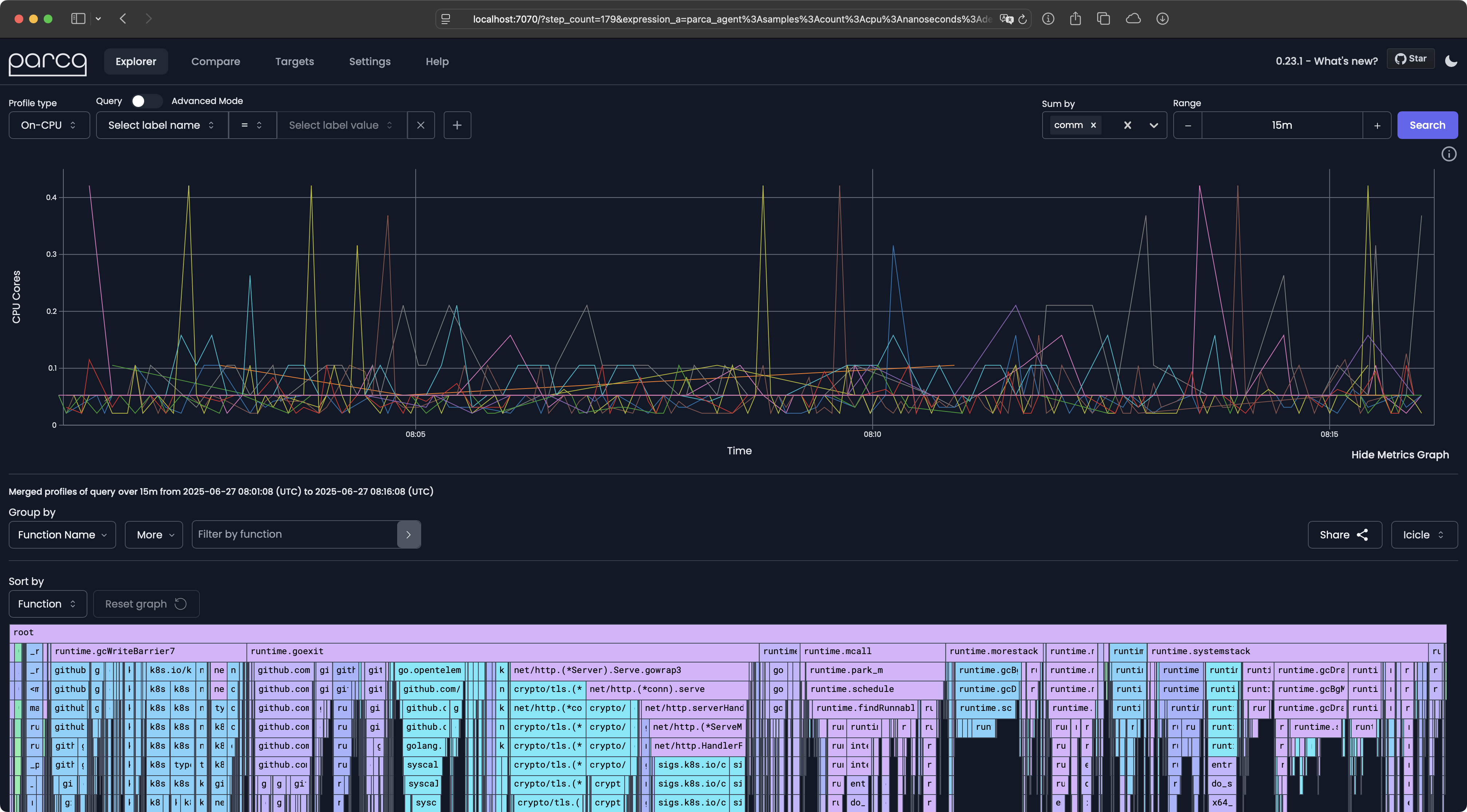

Continuous Profiling Using Parca

In today's blog post, we will examine continuous profiling using Parca. We will set up Parca in a Kubernetes cluster, explore its architecture, and collect profiles from an example application. Finally, we will review the Parca UI to analyze the collected profiles. If you are not familiar with continuous profiling, I recommend reading the "What is profiling?" section in the Parca documentation.

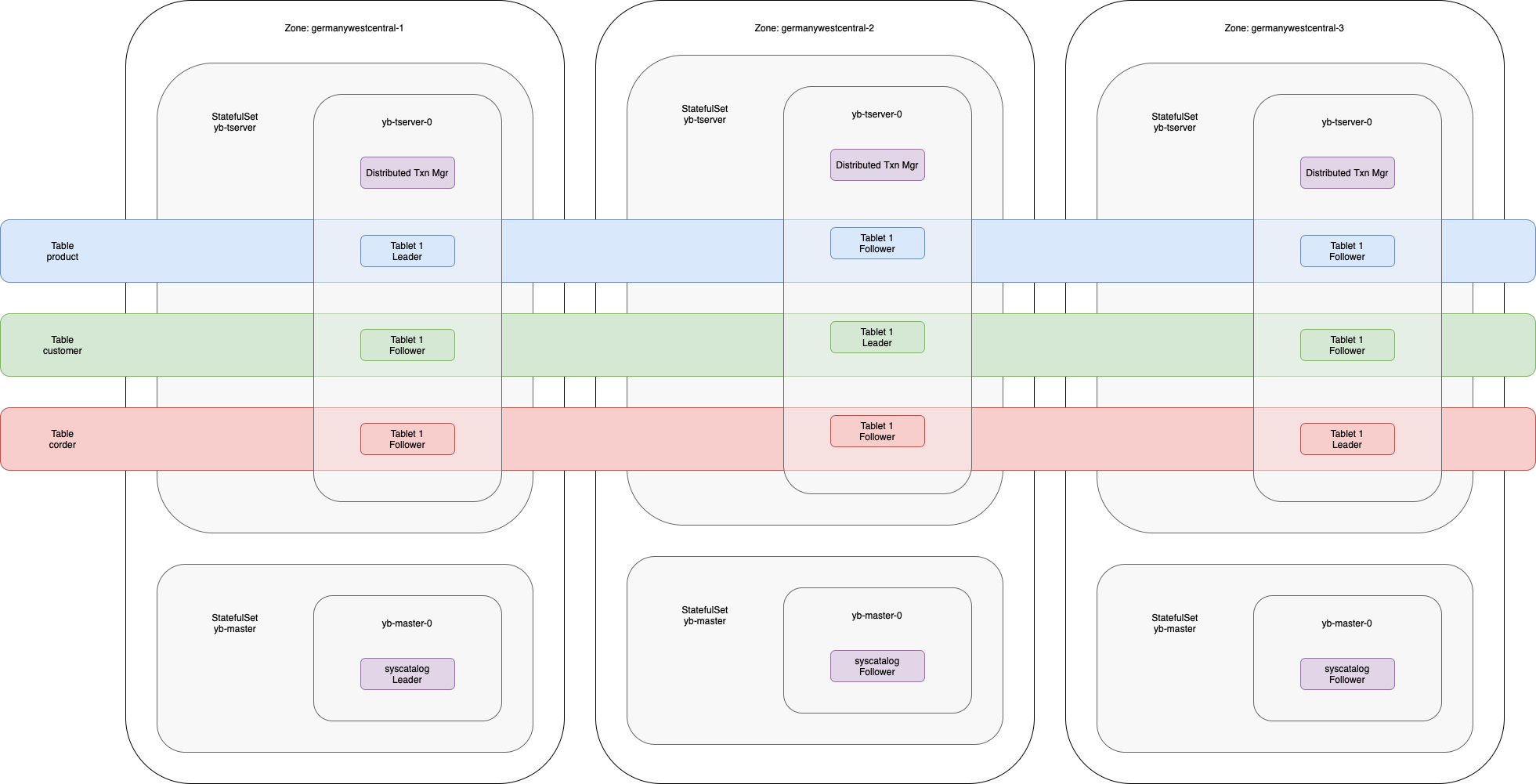

Deploy YugabyteDB on a Multi-Zone AKS Cluster

After getting started with YugabyteDB in the last blog post, I wanted to explore how to set up a zone-aware YugabyteDB cluster. To do this, we will create a multi-zone AKS cluster and use the standard single-zone YugabyteDB Helm chart to deploy one third of the database cluster's nodes into each of the three zones.

- Continuous Profiling Using Parca (2025-06-28)

- Deploy YugabyteDB on a Multi-Zone AKS Cluster (2025-04-13)

- Getting Started with YugabyteDB (2025-04-13)

- Getting Started with Vitess (2025-04-10)

- Use OpenTelemetry Traces in React Applications (2025-03-29)

- Use a VPN to Access your Homelab (2025-03-24)

- Use Cloudflare Tunnels to Access your Homelab (2025-03-16)

- Mac mini as AI Server (2025-03-14)

- Mac mini as Home Server (2025-03-09)

- Convert HTML to PDF / PNG with Puppeteer (2025-03-04)

- Neovim: Custom snacks.nvim Picker (2025-03-03)

- Neovim: Extend snacks.nvim Explorer (2025-03-02)

- My Dotfiles (2025-03-01)

- Welcome to My New Website (2025-02-23)